In digital cameras, the image sensor, a solid-state device, is the equivalent of film. Sensors are very small silicon chips made up of millions of photosensitive diodes, also called photosites, photodiodes or photoelements. The light (photons) striking the sensor is converted into its digital counterparts, the pixels. Pixels are often referred to as photosites, although this is not technically correct.
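
To put "millions" into numbers, here is a small worked example for a hypothetical sensor: a chip the size of a 35 mm film frame (36 mm × 24 mm) carrying a grid of 6000 × 4000 photosites. The grid size is an illustrative assumption, not a specific camera; the sketch multiplies the grid dimensions to get the photosite count and divides the sensor width by the number of columns to get the spacing between photosites.

```python
# Hypothetical sensor: 36 mm x 24 mm chip with a 6000 x 4000 photosite grid.
# These figures are illustrative assumptions, not a real camera model.
SENSOR_WIDTH_MM = 36.0
SENSOR_HEIGHT_MM = 24.0
COLUMNS = 6000
ROWS = 4000


def photosite_count(columns: int, rows: int) -> int:
    """Total number of photosites on the sensor grid."""
    return columns * rows


def pixel_pitch_um(sensor_width_mm: float, columns: int) -> float:
    """Centre-to-centre spacing of photosites, in micrometres."""
    return sensor_width_mm * 1000.0 / columns


if __name__ == "__main__":
    count = photosite_count(COLUMNS, ROWS)
    pitch = pixel_pitch_um(SENSOR_WIDTH_MM, COLUMNS)
    # prints: 24 million photosites, 6.0 µm pitch
    print(f"{count / 1e6:.0f} million photosites, {pitch:.1f} µm pitch")
```

Each photosite on such a chip is only about 6 µm wide, which is why even a small sensor can hold tens of millions of them.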

How does it work?

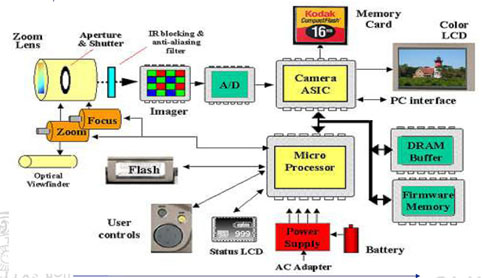

When you press the shutter button on your digital camera, each photosite measures the intensity of the light, that is, the number of photons it receives. The more light a photosite collects, the higher its electric charge. The Analog-to-Digital Converter (ADC) then transforms this charge into a digital number (a digital signal). The microprocessor processes the digital signal, and the result is stored on a storage medium (e.g., an XX or CompactFlash card). In some cameras, a Digital Signal Processor further improves the signal coming from the ADC by reducing background noise.
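
The readout chain described above (photons to charge, then charge to a digital number) can be sketched as follows. This is a minimal sketch assuming a hypothetical sensor with a quantum efficiency of 50% (the fraction of photons converted to electrons), a full-well capacity of 30,000 electrons (the most charge a photosite can hold) and a 12-bit ADC; all three values, and the function names, are illustrative assumptions rather than figures from any real camera.

```python
# Hypothetical sensor parameters; real values vary widely between cameras.
QUANTUM_EFFICIENCY = 0.5      # fraction of photons converted to electrons
FULL_WELL_ELECTRONS = 30_000  # maximum charge a photosite can hold
ADC_BITS = 12                 # ADC resolution: 2**12 = 4096 levels


def photons_to_charge(photons: int) -> int:
    """Electrons collected by one photosite.

    More light gives more charge, until the photosite is full; any
    further light is clipped (a "blown" highlight).
    """
    electrons = int(photons * QUANTUM_EFFICIENCY)
    return min(electrons, FULL_WELL_ELECTRONS)


def adc(electrons: int, bits: int = ADC_BITS) -> int:
    """Map the charge linearly onto 0 .. 2**bits - 1, rounding to the nearest level."""
    max_code = (1 << bits) - 1
    # Integer arithmetic keeps the rounding exact and deterministic.
    return (electrons * max_code + FULL_WELL_ELECTRONS // 2) // FULL_WELL_ELECTRONS


def read_out(photon_counts):
    """Convert the photon counts of a row of photosites into digital values."""
    return [adc(photons_to_charge(p)) for p in photon_counts]


if __name__ == "__main__":
    print(read_out([0, 6000, 30000, 60000, 100000]))  # [0, 410, 2048, 4095, 4095]
```

The last two photosites receive different amounts of light but produce the same value, because both are saturated. Real readout chains also apply gain (the ISO setting) and add noise, which this sketch leaves out.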

How is colour captured?

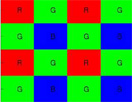

The photosites can only record the brightness (intensity) of the light, not its colour. Colour is therefore captured by placing a red, green or blue (RGB) filter over each site. The filters are arranged in a mosaic pattern (the Bayer matrix, Figure 2), and the final colour of each pixel is calculated by interpolating the values of neighbouring pixels. The company Foveon has developed a technology that stacks three photosensitive layers on top of each other, so that the full colour of each pixel is recorded directly at each site instead of being interpolated. Sony now uses a modified Bayer matrix with an additional colour filter, which it claims improves colour accuracy.

Figure 2: The Bayer matrix is a fixed pattern of blue (B), green (G) and red (R) filters. Each site is surrounded by sites of the other two colours (e.g., red and green for a blue site).
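
The Bayer pattern in Figure 2 and the interpolation of neighbouring pixels can be illustrated in a few lines of Python. This is a minimal sketch assuming an RGGB layout (the top-left 2×2 block reads red, green / green, blue) and plain bilinear interpolation, the simplest demosaicing method; camera processors generally use more sophisticated algorithms, and the function names here are hypothetical. The `mosaic` function simulates what the sensor records (one colour per site), and `demosaic_bilinear` rebuilds a full RGB value at every site by averaging the same-colour sites in its 3×3 neighbourhood.

```python
def bayer_colour(row: int, col: int) -> str:
    """Filter colour at (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb):
    """Simulate the sensor: each site keeps only the channel its filter passes."""
    index = {"R": 0, "G": 1, "B": 2}
    return [[pixel[index[bayer_colour(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb)]


def demosaic_bilinear(raw):
    """Estimate full RGB at every site by averaging same-colour neighbours.

    For each site and each channel: if the site carries that colour, use its
    own value; otherwise average the sites in the surrounding 3x3 window
    (clipped at the image border) that carry it. Needs an image of at
    least 2x2 sites, so every window contains all three colours.
    """
    height, width = len(raw), len(raw[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            pixel = []
            for channel in "RGB":
                if bayer_colour(r, c) == channel:
                    pixel.append(raw[r][c])
                    continue
                values = [raw[rr][cc]
                          for rr in range(max(r - 1, 0), min(r + 2, height))
                          for cc in range(max(c - 1, 0), min(c + 2, width))
                          if bayer_colour(rr, cc) == channel]
                pixel.append(sum(values) / len(values))
            out_row.append(tuple(pixel))
        out.append(out_row)
    return out


if __name__ == "__main__":
    # A flat test image: every pixel has the same colour.
    flat = [[(100, 150, 200)] * 4 for _ in range(4)]
    restored = demosaic_bilinear(mosaic(flat))
    print(restored[1][1])  # (100.0, 150.0, 200.0)
```

At a blue site such as (1, 1), the four horizontal and vertical neighbours are green and the four diagonal neighbours are red, matching the caption. A flat image survives the round trip unchanged; at sharp edges, simple averaging mixes values from both sides and can produce colour fringes.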